How L.A. Noire Conquered The Uncanny Valley With Aussie Technology Depth Analysis (Gizmodo Republish)

With the shuttering of Gizmodo Australia, I’m pulling a few of my favourite articles over here for safekeeping. Hopefully this won’t upset any of the Copyright Gods, as I don’t own the Copyright. But given the current owners are shuttering the publication I want to make sure this stuff isn’t lost forever.

Originally Published: December 17, 2010 at 8:00 am

From the moment Rockstar unveiled their trailer for upcoming detective thriller, L.A. Noire from Aussie developers Team Bondi, we’ve known that the bar for motion capture in video games has not only been raised, but sent through the roof. Animators have been flirting with the edge of the uncanny valley for years, but now using some incredibly intelligent and advanced technology, the guys from Team Bondi have created the 21st century version of Pinocchio, turning digital game characters into realistic, animated people. A few weeks ago, we got to see the technology behind this amazing step forward up close.

“Match me, Sydney”

– Sweet Smell of Success, 1957

You’d never know it from the outside of the nondescript building in Culver City in Los Angeles, but the US home of Australian company Depth Analysis houses some of the world’s most advanced motion capture technology. Dubbed “MotionScan”, the technology is a combination of high definition facial scanning and intelligent algorithms that can capture high definition video of an actor’s face in stunning, true-to-life detail, and then convert it to a virtual three-dimensional head for placement within the game.

The story of how this Australian technology came to be is almost as impressive as the end result. The brainchild of Team Bondi founder Brendan McNamara, Depth Analysis began its life back in 2004 as a small research project. According to Rockstar VP of Development Jeronimo Barrera, it was born from a longing to capture more detail in the motion capture studio.

“Brendan had a lot of experience with motion capture and he wanted to be able to capture performances, meaning the face. Doing traditional motion capture you’re capturing the bones, and he didn’t want to capture what was on the inside, he wanted to capture what was on the outside. So they came up with a plan and he and Oliver Bao developed MotionScan, which is now called Depth Analysis.”

The road from research project to functional technology has been a long one. Although Team Bondi and Depth Analysis have been developing the MotionScan technology since 2004, it was only this year that they actually began filming actors in the studio for L.A. Noire. Since January, more than 400 actors have been put through the Depth Analysis capture room and more than 2200 pages of script have been acted out for the game. Aaron Staton, who plays the game’s lead protagonist, Cole Phelps, has put in more than 80 hours of MotionScan filming himself.

“Louie, this could be the beginning of a beautiful friendship”

– Casablanca, 1942

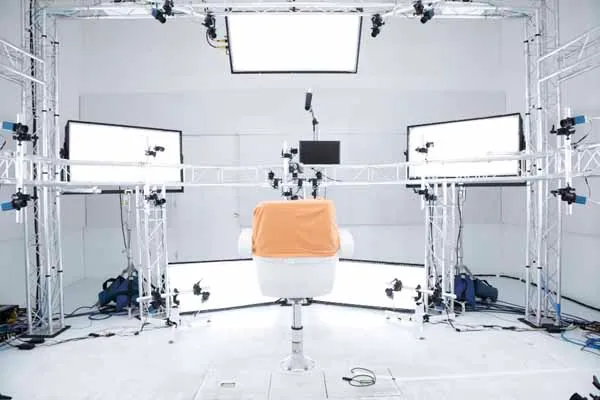

At the centre of the seemingly magical setup that makes the entire scanning process possible is a soundproof and lightproof room, kitted out with a very precisely aligned 32-camera rig in a 360-degree arrangement around the actor’s central chair. Like a scene from Kubrick’s 2001: A Space Odyssey, the room is uniformly lit in a bright, sterile white light, to ensure that there are absolutely no dark spots on the actor’s face. Having a well-lit subject is essential to the technology working.

The 32 Machine Vision cameras are very precisely positioned in pairs around the chair, like numbers around a roulette wheel. Even the slightest bump of the rig could offset the alignment, and fixing it can take up to four hours for each pair. The setup – which was moved from Sydney to LA over the Christmas holiday period last year – took Head of Research and Development at Depth Analysis Oliver Bao over ten 16-20 hour days to install the 1000-plus pieces in the rig. Each $6,000 camera is capable of sending 1080p video at 30 frames per second directly to the server room simultaneously, which can then be turned into a status scan in as little as 15 minutes. Bao tells us they’re the same cameras NASA uses during space shuttle launches to give them a few seconds of footage before melting away.

Each camera is fixed focused on the actor, who has to sit extremely still and act with their face – there’s only about 50cm worth of leeway for head movement during the acting process – which makes it a tough proposition for the actors involved. One of the main reasons the studio was moved from Sydney to LA was because many of the Australian actors being used in the early days struggled to manage the US accent while sitting extremely still. While on the chair, the actors have to wear an orange shirt with three green balls attached, which act as reference points for the development team back in Sydney when they add the Depth Analysis footage to the motion captured acting done at a separate facility.

“It’s very streamlined. We get geometry, colour, plus body orientation and voice, and it’s all recorded in one go. [The dots] are to track where your vertebrae is, so you know where the neck join is in animation. We use those three points to deduct it, and once it’s found, we just plunk the head onto an animation like a Lego head,” explains Bao.
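To make the three-marker idea concrete, here is a minimal sketch of how three tracked points can define a rigid reference frame, from which a fixed attachment point such as a neck joint can be recovered. This is not Depth Analysis’s actual method, which the article does not describe. The function names, the choice of axes and the `local_offset` calibration value are all assumptions made for illustration.

```python
import math

def sub(a, b):
    """Component-wise difference of two 3D points."""
    return tuple(x - y for x, y in zip(a, b))

def cross(a, b):
    """Cross product of two 3D vectors."""
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])

def normalize(v):
    """Scale a vector to unit length; fails if the markers are degenerate."""
    length = math.sqrt(sum(x * x for x in v))
    if length == 0:
        raise ValueError("markers are coincident or collinear")
    return tuple(x / length for x in v)

def marker_frame(p0, p1, p2):
    """Build an orthonormal frame (origin plus three axes) from three markers."""
    x_axis = normalize(sub(p1, p0))
    z_axis = normalize(cross(x_axis, sub(p2, p0)))  # normal to the marker plane
    y_axis = cross(z_axis, x_axis)                  # completes a right-handed frame
    return p0, x_axis, y_axis, z_axis

def neck_joint(p0, p1, p2, local_offset):
    """Map a calibrated offset (hypothetical) from the marker frame to world space."""
    origin, x_axis, y_axis, z_axis = marker_frame(p0, p1, p2)
    return tuple(
        origin[i]
        + local_offset[0] * x_axis[i]
        + local_offset[1] * y_axis[i]
        + local_offset[2] * z_axis[i]
        for i in range(3)
    )

# Hypothetical marker positions (metres) and a made-up calibration offset.
joint = neck_joint((0, 0, 0), (1, 0, 0), (0, 1, 0), (0.5, 0.2, 0.1))
print(joint)
```

Because the offset is expressed in the markers’ own frame, the recovered point follows the actor’s torso as it shifts or turns. In a sketch like this, that is what would let a separately captured head be “plunked” onto a body animation.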

In addition to the 32-camera setup, there’s a 33rd camera that connects directly to an adjacent room for the director to watch the performance in real time, and a screen which can display the script for the actor, play back footage or allow the actor to see the director in the next room. A lapel microphone and reference mic make sure the actor’s voice is properly captured without any popping or background noise.

Considering the amount of data coming in from 32 Full HD cameras, the server setup at Depth Analysis is breathtaking. Nine servers, each capable of 300MB/s speeds, are used for the video recording (although the ninth server is redundant), while a 45TB buffer sits alongside the server tower, working away at 650MB/s. Footage is captured to the video server, then moved to the buffer after it’s been edited, before being copied to tape and sent back to Sydney to be processed.
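For a rough sense of scale, the figures above can be turned into a back-of-the-envelope data-rate estimate. The resolution, frame rate, camera count and server speeds come from the article. The one-byte-per-pixel raw format and the even spread of cameras across the eight active servers are assumptions, so treat the output as an illustration rather than Depth Analysis’s real numbers.

```python
# Figures quoted in the article
WIDTH, HEIGHT = 1920, 1080   # 1080p frame
FPS = 30                     # frames per second per camera
CAMERAS = 32                 # capture cameras in the rig
ACTIVE_SERVERS = 8           # nine servers, one of them redundant
SERVER_MB_PER_S = 300        # quoted speed of each server
BUFFER_TB = 45               # quoted buffer size

# ASSUMPTION: uncompressed 8-bit raw sensor data, i.e. one byte per pixel.
BYTES_PER_PIXEL = 1

def camera_rate_mb_s(bytes_per_pixel=BYTES_PER_PIXEL):
    """Raw data rate of a single camera in MB/s (1 MB = 1,000,000 bytes)."""
    return WIDTH * HEIGHT * FPS * bytes_per_pixel / 1_000_000

def rig_rate_mb_s(bytes_per_pixel=BYTES_PER_PIXEL):
    """Combined raw data rate of all capture cameras in MB/s."""
    return camera_rate_mb_s(bytes_per_pixel) * CAMERAS

def per_server_rate_mb_s(bytes_per_pixel=BYTES_PER_PIXEL):
    """Load per active server, assuming cameras are spread evenly."""
    return rig_rate_mb_s(bytes_per_pixel) / ACTIVE_SERVERS

def footage_tb(minutes, bytes_per_pixel=BYTES_PER_PIXEL):
    """Raw volume in TB for a given number of captured minutes."""
    return rig_rate_mb_s(bytes_per_pixel) * minutes * 60 / 1_000_000

print(f"Per camera: {camera_rate_mb_s():.1f} MB/s")
print(f"Whole rig:  {rig_rate_mb_s():.1f} MB/s")
print(f"Per server: {per_server_rate_mb_s():.1f} MB/s of {SERVER_MB_PER_S} MB/s")
print(f"50 minutes: {footage_tb(50):.2f} TB of the {BUFFER_TB} TB buffer")
```

Under these assumptions each camera produces roughly 62MB/s and the rig about 2GB/s, which fits within what eight 300MB/s servers can absorb. A 50-minute capture day, the figure quoted later in the piece, would come to around 6TB before any editing. A higher bit depth or colour format would scale every figure proportionally.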

“Experience has taught me never to trust a policeman. Just when you think one’s all right, he turns legit”

– The Asphalt Jungle, 1950

The Depth Analysis setup is capable of capturing 50 minutes worth of footage in a day. That may not sound like a lot, but compared to a traditional motion capture setup’s 10 to 15 minutes, it suddenly becomes a much more efficient venture. It also completely does away with the need to animate a character’s face.

“We basically just select the processing configurations, the presets, and just let it go. So there’s no character artists or animators touching up the face. We just hand it over to them to put onto the body and do the head orientation adjustments. So basically we just cut out traditional animation – it’s a lot faster this way,” explains Bao.

The end result is not just faster – it’s also breathtakingly detailed. The team from Rockstar showed us a level from L.A. Noire called “The Fallen Idol”, a case about a B-Grade actress and her 15 year old niece surviving an attempted murder at the hands of a movie producer. Just like every crime show you’ve ever watched on television, as the evidence comes to hand it becomes clear that there is more at play than just an attempted murder… There’s blackmail, rape, underground pornography and organised crime all mixed up in the case.

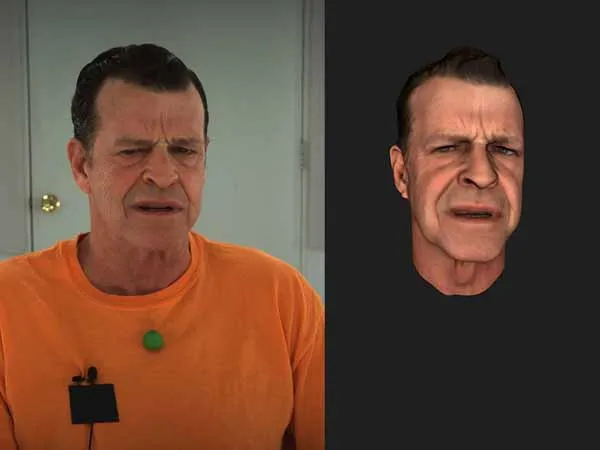

But what’s most striking about the mission as it was played out – and what makes L.A. Noire the ideal launch vehicle for a technology like MotionScan – is that the act of interrogating witnesses and suspects relies so much on the performance of the actors, and how that is shown on screen. The subtlest shift of the eyes from the actress, the slightest twitch in the character’s voice or the smug tip of a curved lip all influence your decision as a player as to whether or not you are being lied to. Unlike any video game that has ever come before it, using these facial cues is a necessary part of the gameplay, and it feels natural because the expressions are so natural.

Through either fortuitous timing or a part of some Rockstar masterplan, the Depth Analysis studio was actually reshooting scenes from The Fallen Idol mission on the day of the tour. As we sat in the reception area waiting to be shown the technology that makes the whole operation possible, an attractive young girl with a cut across her right eye walked past. The immediate thought was that she looked familiar. It took a few seconds, but eventually we realised that it was the actress who played the teenage girl, Jessica Hamilton, in The Fallen Idol mission of the game. In a city like LA you expect to see some celebrities, but you don’t necessarily expect to recognise video game characters. As the tour progressed and we watched her refilm her lines from that episode, it was amazing just how lifelike the in-game character actually was.

An even better indication of the detail captured by the MotionScan process was watching a scene from the game being acted by a floating head – captured from an actor in the Depth Analysis chair and rendered on a screen. One scene in particular, where the character seems unsure about whether or not he actually killed someone the previous evening, shows the true power of the technology. By capturing a real actor’s performance, you can see every nuanced expression, from the confused sadness in his eyes, to the quivering voice and trembling lips as he considered the weight of what he may have done, played out on a computer monitor by a floating head that could be manipulated in real time.

Thanks to Depth Analysis, L.A. Noire is already blurring the lines between video games and cinema, which is far from an easy task, as Jeronimo Barrera explains:

“We’re trying something new that’s never been done. We’re not just releasing a game – everybody looks at this who hasn’t seen it in person, even from the screenshots you look at it and go, ‘Oh, it’s GTA with Fedoras on’. But the reality is that it’s a whole new different concept, it’s a whole new way of looking at interactive entertainment. I think we’re starting to blur the lines between a television program and a video game.”

“I caught the blackjack right behind my ear. A black pool opened up at my feet. I dived in. It had no bottom.”

– Murder, My Sweet, 1944

One surprising aspect of the Depth Analysis scans in L.A. Noire is that they are pretty heavily compressed. After showing a line played out by the floating head of Aaron Staton, Oliver Bao explains:

“The raw image is actually much better quality. It’s suitable for film and post-production use. So it’s not limited to just games – This actually started as a capture for cutscenes, but we ended up using it for full game face. At the time, nobody thought this was actually possible, and nobody knew how to use it. So once it was working, people started thinking how they could actually make use of it and that became part of the core gameplay”.

Given that the technology used for L.A. Noire is so advanced and offers such a new level of immersion to gameplay, you may want to assume that it is the future direction of all games from Rockstar. Not so, according to Barrera:

“Are we going to use MotionScan in every game? Probably not. But where it fits we will definitely use it.

“It’s kind of a hard thing to do because you look at this thing and you instantly go, ‘Oh, they’ve got this new tech, so they’re gonna do some gimmicky tech demo game’, and it’s not at all what this is. It helps, and it’s there, but it’s also the amazing writing by Brendan, it’s [Head of Production and Design at Team Bondi, Simon Wood]’s amazing eye for detail, it’s all the artists, all the animators… It’s been a huge collaborative effort to make this thing.”

But given that L.A. Noire is just the first practical implementation of MotionScan, the future looks pretty bright for the small Australian company.

“We’ve only got two senior programmers and one junior programmer at this stage – it’s a very tiny team. Basically the three of us pretty much created the whole lot,” explains Bao.

“[We’re] all Australian… for now. We’re looking at setting up a permanent state studio here, and we’re also looking at doing more R&D work [in the US] as well, because we need to work more closely with clients, they need to have someone here that’s technical to work with them.”

Yet despite spending six years creating the technology and watching it slowly move from research project to a potentially game-changing motion capture technology, Bao still hasn’t subjected himself to the process.

“I refuse to. There’s nowhere to hide. Reality can be cruel and it’s not DA’s fault… That’s what I say”.

Yet for Rockstar and Team Bondi, the fact that there’s nowhere to hide will prove to be their greatest strength.